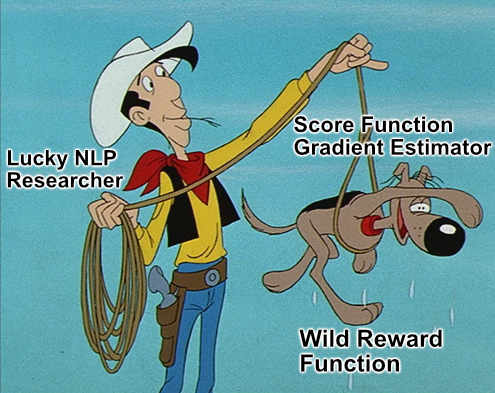

Taming Wild Reward Functions: The Score Function Gradient Estimator Trick

I have written a blog post that explains why the score function gradient estimator trick is needed and how it works.

Teaser

Maximum likelihood estimation (MLE) alone is often not enough to train sequence-to-sequence neural networks in NLP. In such cases we turn to an external metric: a reward function that judges the quality of model outputs. The network parameters are then updated based on the model outputs and their corresponding rewards.

For this update, we need a derivative: the gradient of the expected reward with respect to the network parameters.
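To make that derivative concrete, here is a sketch assuming a model $p_\theta(y \mid x)$ that assigns a probability to every output sequence $y$ given an input $x$, and a reward $R(y)$ (this notation is introduced here for illustration):

```latex
J(\theta) = \mathbb{E}_{y \sim p_\theta(\cdot \mid x)}\big[R(y)\big] = \sum_{y} p_\theta(y \mid x)\, R(y),
\qquad
\nabla_\theta J(\theta) = \sum_{y} R(y)\, \nabla_\theta p_\theta(y \mid x)
```

Written this way, the gradient is a sum over all possible output sequences, which is intractable for sequence models, and it is not an expectation under $p_\theta$, so it cannot be approximated simply by averaging over outputs sampled from the model.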

But how can we compute it if the reward function is unknown or cannot be differentiated?

Enter: The score function gradient estimator trick.
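A minimal sketch of the trick in Python with NumPy. It rests on the identity $\nabla_\theta \mathbb{E}_{y \sim p_\theta}[R(y)] = \mathbb{E}_{y \sim p_\theta}[R(y)\, \nabla_\theta \log p_\theta(y)]$, which follows from $\nabla_\theta p_\theta(y) = p_\theta(y)\, \nabla_\theta \log p_\theta(y)$: the gradient becomes an expectation under the model, so it can be estimated by sampling, and $R$ is only ever evaluated, never differentiated. As assumptions for illustration, a softmax over four discrete outputs stands in for a sequence-to-sequence model, and `black_box_reward`, `score_function_gradient`, and `exact_gradient` are hypothetical names; the exact gradient of this toy model is computed only to check the estimate.

```python
import numpy as np


def softmax(logits):
    # Numerically stable softmax over a 1-D array of logits.
    shifted = logits - logits.max()
    exp = np.exp(shifted)
    return exp / exp.sum()


def black_box_reward(y):
    # Hypothetical reward, treated as a black box that we can only evaluate,
    # never differentiate (a stand-in for an external metric).
    return 1.0 if y == 2 else 0.0


def score_function_gradient(theta, reward_fn, num_samples, rng):
    """Monte Carlo estimate of grad_theta E_{y ~ softmax(theta)}[R(y)]."""
    probs = softmax(theta)
    # Sample outputs from the current model.
    ys = rng.choice(len(theta), size=num_samples, p=probs)
    # Query the reward function only at the sampled outputs.
    rewards = np.array([reward_fn(int(y)) for y in ys])
    # Score function of a softmax: grad_theta log p(y) = one_hot(y) - probs.
    grad_log_p = np.eye(len(theta))[ys] - probs
    # Average R(y) * grad_theta log p(y) over the samples.
    return (rewards[:, None] * grad_log_p).mean(axis=0)


def exact_gradient(theta, reward_fn):
    # Closed form for this toy model: dJ/dtheta_k = p_k * (R(k) - E[R]).
    probs = softmax(theta)
    rewards = np.array([reward_fn(k) for k in range(len(theta))])
    return probs * (rewards - probs @ rewards)


rng = np.random.default_rng(0)
theta = np.array([0.5, -1.0, 0.2, 0.0])
est = score_function_gradient(theta, black_box_reward, 100_000, rng)
exact = exact_gradient(theta, black_box_reward)
print("estimate:", np.round(est, 3))
print("exact:   ", np.round(exact, 3))
```

The estimator needs nothing from `black_box_reward` except its value at sampled outputs, so the same code works for any reward that can be evaluated. The price is variance: the estimate is noisy and only approaches the exact gradient as `num_samples` grows.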

Full story.